
Newer versions of Elasticsearch include advances in speed, security, scalability, and hardware efficiency, and they support newer tool releases. All upgrades from Elasticsearch 2.3.3/5.6.12 to version 6.8.6 are legacy migrations that require both a new, separate Elasticsearch cluster and a new Swarm Search Feed to reindex content into the new ES cluster and format. Once on ES 6.8.6, upgrade in place to Elasticsearch 7 as part of the Swarm 12 upgrade.

Migration Process

...

Given the complexities of converting legacy ES data, the easiest path is to start fresh: provision a new ES cluster (machines or VMs meeting the requirements), install ES, Search, and Swarm Metrics on this cluster, and create a new search feed to this cluster. Swarm continues to support the existing primary feed to the legacy ES cluster while it builds the index data for the new feed, so searching remains available for users. Once the new feed has completed indexing, make it primary and restart the Gateways to complete the migration. This is an overview of the migration process to Elasticsearch 6:

...

Swarm Requirements |

|

|---|---|

New ES Cluster |

|


Migrating to Elasticsearch 6

Follow these steps to migrate to a new Elasticsearch 6 cluster, from which you can upgrade to Swarm 12 and Elasticsearch 7 in place, retaining the same Search feed and index data. An existing Elasticsearch 2.3.3 or 5.6.12 cluster cannot be upgraded; a new cluster and new Search Feed must be created.

Important: Do not run different versions of Elasticsearch in the same ES cluster. Verify the new Elasticsearch configuration has a different name for the new cluster; otherwise, the new ES servers join the old ES cluster.
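The cluster-name check above can be scripted. The following is a minimal sketch, assuming both clusters answer on port 9200 and that the root endpoint (`GET /`) reports a `cluster_name` field; the function names and the `OLD_ES_HOST` / `NEW_ES_HOST` placeholders are illustrative and not part of the Swarm tooling.

```shell
# Print the "cluster_name" value found in an Elasticsearch root-endpoint
# response (GET /) supplied on stdin. Works on compact or pretty JSON.
cluster_name_from_json() {
    sed -n 's/.*"cluster_name"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Succeed only when both names are non-empty and different.
cluster_names_differ() {
    [ -n "$1" ] && [ -n "$2" ] && [ "$1" != "$2" ]
}

# Example (OLD_ES_HOST and NEW_ES_HOST are placeholders):
#   old=$(curl -s "OLD_ES_HOST:9200/" | cluster_name_from_json)
#   new=$(curl -s "NEW_ES_HOST:9200/" | cluster_name_from_json)
#   cluster_names_differ "$old" "$new" || echo "Cluster names must differ" >&2
```

Running this before starting the new ES servers gives an early warning if the new configuration still carries the old cluster name.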

Set up the new Elasticsearch.

Obtain the Elasticsearch RPMs and Search from the downloaded Swarm bundle.

On each ES server in the newly provisioned cluster install and configure the Elasticsearch components.

Install the RPMs from the bundle.

    yum install elasticsearch-VERSION.rpm
    yum install caringo-elasticsearch-search-VERSION.noarch.rpm

Complete configuration of Elasticsearch and the environment. See Configuring Elasticsearch.

Set the number of replicas to zero to avoid warnings if using a single-node ES cluster. See Scaling Elasticsearch.

The configuration script starts the Elasticsearch service and enables it to start automatically.

Verify the mlockall setting is true. If it is not, contact DataCore Support.

    curl -XGET "ES_HOST:9200/_nodes/process?pretty"
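Reading the mlockall value by eye becomes error-prone with many nodes. The sketch below is one way to automate the check, assuming the pretty-printed response lists one `"mlockall"` line per node; the function name is hypothetical.

```shell
# Read the pretty-printed output of GET /_nodes/process?pretty on stdin and
# succeed only if at least one node reports mlockall and every one is true.
all_nodes_mlockall() {
    input=$(cat)
    # grep -c prints 0 but exits non-zero when nothing matches, hence "|| true".
    total=$(printf '%s\n' "$input" | grep -c '"mlockall"' || true)
    locked=$(printf '%s\n' "$input" | grep -c '"mlockall"[[:space:]]*:[[:space:]]*true' || true)
    [ "$total" -gt 0 ] && [ "$total" -eq "$locked" ]
}

# Example (ES_HOST is a placeholder):
#   curl -s -XGET "ES_HOST:9200/_nodes/process?pretty" | all_nodes_mlockall \
#       || echo "mlockall is not true on every node" >&2
```

The function treats an empty or unexpected response as a failure, so a proxy or connection problem is not mistaken for a passing check.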

If cURL requests do not return the expected response, verify that the HTTP_PROXY and http_proxy environment variables are not set. A proxy applied to root users' commands, for example by an IT organization or security policy, causes communication issues between ES nodes.

Proceed to the next server.
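The proxy check mentioned above can be sketched as a small helper. It only inspects the two variables this guide names; the function name is illustrative, and the `env -u` option in the usage comment assumes the GNU coreutils `env` found on RHEL/CentOS.

```shell
# Print the name of each proxy variable named in this guide that is set
# to a non-empty value in the current environment.
proxy_vars_set() {
    if [ -n "${HTTP_PROXY:-}" ]; then echo "HTTP_PROXY"; fi
    if [ -n "${http_proxy:-}" ]; then echo "http_proxy"; fi
}

# Example: report any proxy settings, then retry the request with both
# variables removed for that one command (ES_HOST is a placeholder):
#   proxy_vars_set
#   env -u HTTP_PROXY -u http_proxy curl -XGET "ES_HOST:9200/_nodes/process?pretty"
```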

All ES servers are installed and started at this point. Use Swarm UI or one of these methods to verify Elasticsearch is running (the status is yellow or green):

    curl -XGET ES_HOST:9200/_cluster/health
    systemctl status elasticsearch

If cURL requests do not return the expected response, verify that the HTTP_PROXY and http_proxy environment variables are not set. A proxy applied to root users' commands, for example by an IT organization or security policy, causes communication issues between ES nodes.

Troubleshooting: Run the status command (systemctl status elasticsearch) and look at the log entries in /var/log/elasticsearch/CLUSTERNAME.log
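For unattended checks, the health response can be reduced to a pass/fail result. This is a minimal sketch, assuming the `_cluster/health` response contains a single `"status"` field; both function names are hypothetical.

```shell
# Extract the "status" value from a GET /_cluster/health response on stdin.
health_status_from_json() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Succeed when the status is one this guide accepts as running.
es_is_running() {
    case "$1" in
        green|yellow) return 0 ;;
        *) return 1 ;;
    esac
}

# Example (ES_HOST is a placeholder):
#   status=$(curl -s -XGET "ES_HOST:9200/_cluster/health" | health_status_from_json)
#   es_is_running "$status" || echo "Elasticsearch status is '$status'" >&2
```

An empty status (no response, or a proxy error page) fails the check the same way a red status does.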

Create a search feed for the new ES. Swarm allows creation of more than one Search feed, which supports the transition between Elasticsearch clusters.

Create a new search feed pointing to the new Elasticsearch cluster in the Swarm UI. See Managing Feeds.

If errors are encountered during feed creation, verify that the [storage cluster] managementPassword is set properly in the gateway.cfg file. If a change is needed, correct the value and restart the gateway service.

Continue using the existing primary feed for queries during the transition. The second feed is incomplete until it fully clears the backlog.
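If feed creation fails, the managementPassword check described above can be done from a shell. The sketch below assumes gateway.cfg uses an INI-style `key = value` layout with bracketed section headers; `ini_has_key` is a hypothetical helper, and the file path and exact section spelling must be taken from the actual installation.

```shell
# Succeed if KEY has a non-empty value inside [SECTION] of an INI-style file.
# Usage: ini_has_key FILE SECTION KEY
ini_has_key() {
    awk -v section="[$2]" -v key="$3" '
        /^[ \t]*\[/ {
            # Remember which section we are in, trimmed of whitespace.
            gsub(/^[ \t]+|[ \t]+$/, "")
            current = $0
            next
        }
        current == section {
            line = $0
            sub(/^[ \t]+/, "", line)
            eq = index(line, "=")
            if (eq == 0) next
            name = substr(line, 1, eq - 1)
            value = substr(line, eq + 1)
            gsub(/[ \t]+$/, "", name)
            gsub(/^[ \t]+|[ \t]+$/, "", value)
            if (name == key && value != "") found = 1
        }
        END { exit(found ? 0 : 1) }
    ' "$1"
}

# Example (path and section name are placeholders to adapt):
#   ini_has_key /path/to/gateway.cfg "SECTION_NAME" managementPassword \
#       || echo "managementPassword is missing or empty" >&2
```

This only confirms that a value is present; whether the password is correct still has to be verified against the storage cluster.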

Set up Swarm Metrics.

Install Swarm Metrics on one server in the new Elasticsearch cluster (or another machine running RHEL/CentOS 7). See /wiki/spaces/DOCS/pages/2443810001.

(optional) Swarm Metrics includes a script to migrate the historical metrics and content metering data. Proceed with the following steps if the chart history must be preserved (for example, when billing clients based on storage and bandwidth usage):

Add a "whitelist" entry to the new ES server so it trusts the old ES server before running the script.

Edit the config file /etc/elasticsearch/elasticsearch.yml on the destination ES node.

Add the whitelist line, using the old ES source node in place of the example:

    reindex.remote.whitelist: old-indexer.example.com:9200

Restart Elasticsearch:

systemctl restart elasticsearch
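The whitelist edit above can be made repeatable with a small helper. This is a sketch under the assumption that the setting is not yet present in elasticsearch.yml; the function name is hypothetical, and it refuses to touch a file that already defines the setting rather than guessing how to merge values.

```shell
# Append a reindex.remote.whitelist entry to FILE unless the setting is
# already present. Usage: add_reindex_whitelist FILE HOST:PORT
add_reindex_whitelist() {
    file=$1
    source_node=$2
    if grep -q '^[[:space:]]*reindex\.remote\.whitelist[[:space:]]*:' "$file"; then
        echo "reindex.remote.whitelist already set in $file; edit it by hand" >&2
        return 1
    fi
    # Make sure the new setting starts on its own line.
    if [ -n "$(tail -c 1 "$file")" ]; then
        echo >> "$file"
    fi
    printf '%s\n' "reindex.remote.whitelist: $source_node" >> "$file"
}

# Example, followed by the restart from the step above:
#   add_reindex_whitelist /etc/elasticsearch/elasticsearch.yml old-indexer.example.com:9200 \
#       && systemctl restart elasticsearch
```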

Run the data migration script, specifying the source and destination clusters:

    /usr/share/caringo-elasticsearch-metrics/bin/reindex_metrics -s ES_OLD_SERVER -d ES_NEW_SERVER

Troubleshooting options:

By default, the script includes all metering data (client bandwidth and storage usage). To skip importing this data, add the flag -c.

To force reindexing of all imported data, add the flag --force-all.

Allow an hour or more for the script to complete if there is a large amount of metrics to convert (many nodes and several months of data).

If connection or other problems occur and the screen reports errors, run the script again, and repeat until it completes successfully.
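The "run again until it succeeds" advice can be wrapped in a retry loop. The sketch below is a generic helper, not part of Swarm Metrics; the function name, attempt limit, and delay are illustrative choices, and it assumes the script exits non-zero when it reports errors.

```shell
# Run a command until it succeeds, up to MAX_ATTEMPTS times, pausing
# DELAY_SECONDS between attempts. Returns the last exit status on failure.
# Usage: retry_until_success MAX_ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
retry_until_success() {
    max_attempts=$1
    delay_seconds=$2
    shift 2
    attempt=1
    while :; do
        "$@" && return 0
        status=$?
        if [ "$attempt" -ge "$max_attempts" ]; then
            echo "giving up after $attempt attempts (last exit status $status)" >&2
            return "$status"
        fi
        attempt=$((attempt + 1))
        sleep "$delay_seconds"
    done
}

# Example: up to 5 attempts, 60 seconds apart (both values are arbitrary):
#   retry_until_success 5 60 \
#       /usr/share/caringo-elasticsearch-metrics/bin/reindex_metrics -s ES_OLD_SERVER -d ES_NEW_SERVER
```

A bounded number of attempts is used so that a persistent misconfiguration, such as a missing whitelist entry, surfaces as a failure instead of looping indefinitely.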

To see the past metrics, prime the curator by running it with the -n flag:

    /usr/share/caringo-elasticsearch-metrics/bin/metrics_curator -n

Change the metrics.target from the old ES target to the new ES target. This reconfiguration pushes the new schema to the new ES cluster.

Complete new feed and make primary.

Important: Migration is not done until Swarm switches to using the new ES search feed. Because it can take days for a large cluster to reindex the metadata, set calendar reminders to monitor the progress.

On the Swarm UI's Reports > Feeds page, watch for indexing to be done, which is when the feed shows 0 "pending evaluation".

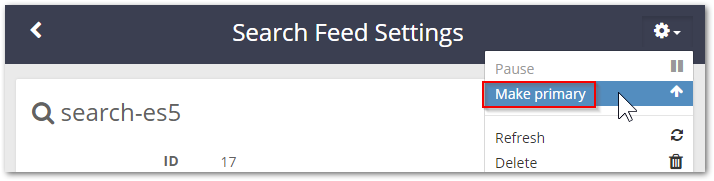

Set Swarm to use the second feed when it is caught up. In the feed's command (gear) menu, select Make Primary.

Install Gateway 7.0, Swarm UI, and Content UI on each Gateway server.

For new Gateway servers, see Gateway Installation.

For Gateway 6.x servers, follow Upgrading Gateway.

For Gateway 5.x servers, follow Upgrading from Gateway 5.x.

Complete post-migration.

Delete the original feed after verifying that the new feed is working as the primary feed target. Having two feeds is for temporary use because every feed incurs cluster activity, even when paused.

As appropriate, decommission the old ES cluster to reclaim those resources.

Once this migration is complete and the cluster is running Swarm 11.3, upgrade in place to Swarm 12, which includes Elasticsearch 7. See How to Upgrade Swarm.